Dev Diary 8: Access Controls and thinking about the Common Workflow Language in the context of in-field data collection

Including a short demonstration of some of the improved notebook access controls we've developed

In this week’s dev diary, Brian shares a video of our new advanced authentication and visibility controls, reflects on a recent paper on the Common Workflow Language, and considers how FAIMS could relate to that data processing and analysis ecosystem.

Status Update

Work continues on our CSIRO (A&F)-sponsored features as we get closer to our delivery date. The two major features that have wrapped up[1] are our access/visibility controls and the ability to disable downloads of server-side images. We’ve modelled a UI for record-conflict resolution and are currently developing the functionality behind it.

We are also supporting CSIRO Mineral Resources taking FAIMS3 into the field this weekend. This first collaboration with CSIRO started with a quick proof of concept in 2014[2], and now they are our first beta testers for the ARDC-sponsored side of the project. With any luck, we’ll have photos and video from their first experiences with FAIMS3 in the wild soon!

Our field-ready set of notebooks continues to grow, with a groundwater sampling notebook prepared for MQ and a port of the Blue Mountains survey (as Penny described in our last blog post). Slightly earlier in development, Adela Sobotkova has used the notebook creator to prepare a first draft of the TRAP Burial Mounds 2.6 module migration into FAIMS3 notebook form. The rate of increase of notebooks is increasing![3]

Record Visibility & Access Controls

While the ability to prevent huge downloads when colleagues are happily snapping away documenting features is excellent, that feature requires little comment or explanation.[4]

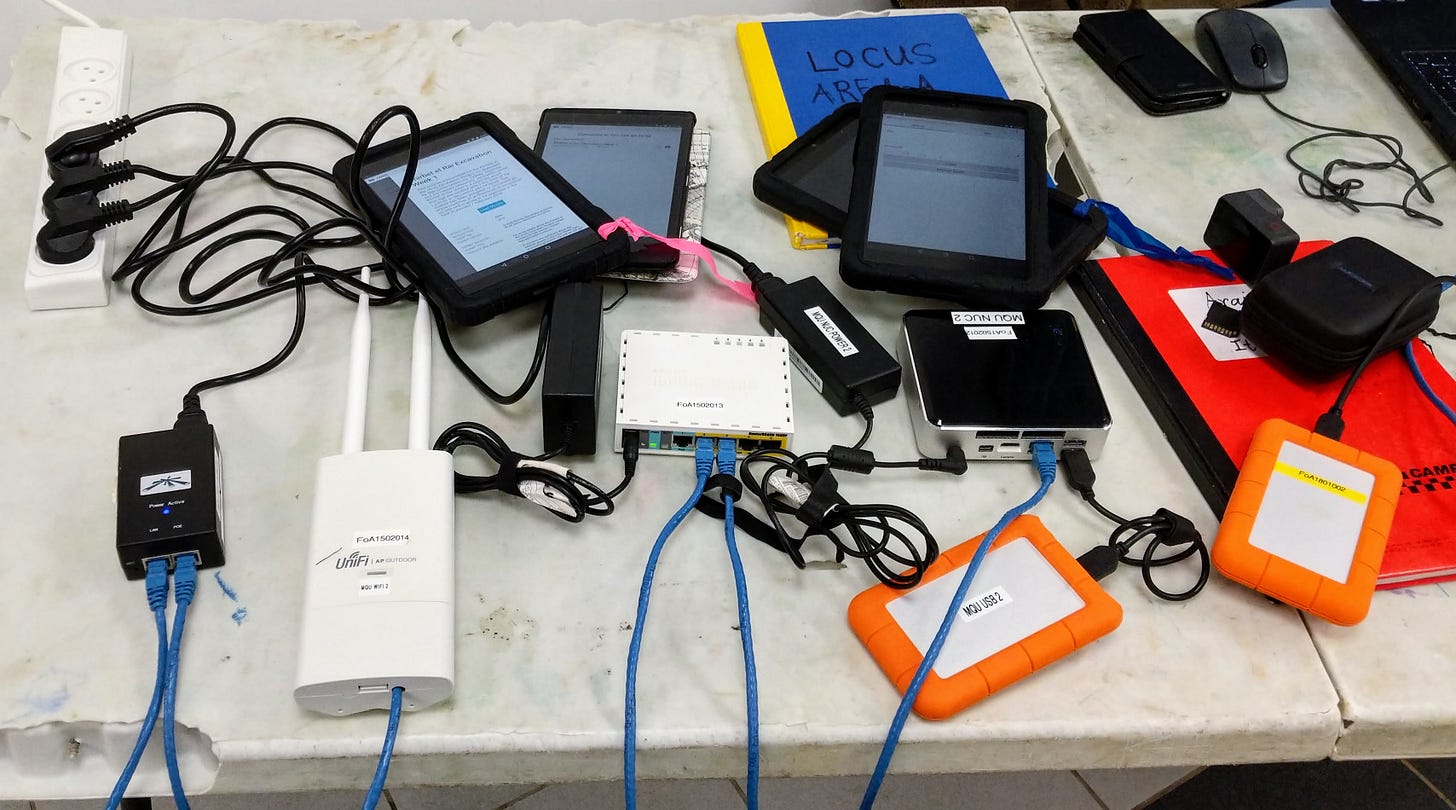

However, with this new feature, we mark another important step beyond our old FAIMS 2 lineage. In the olden days, every user on a server could see all modules (notebooks) on that server, and every record within each of them. Access control and security were designed around airgaps and the pragmatic realities of fieldwork in small teams. If a team needed isolation, they would be given their own server-in-a-box:

Issuing multiple servers-inna-box, unfortunately, doesn’t scale to more complicated projects. (We hope to provide far simpler photos of a FAIMS3 offline server setup soon… soon…) Instead, we have designed multiple roles for visibility and access control on a FAIMS3 server:

Administrator

Supervisor/Notebook Administrator

User

A server administrator will have unlimited read/write access to all notebooks on a server and will be able to see all notebooks on a server. This is the old FAIMS 2 experience. However, for projects and servers that need more nuanced access, we present the roles of “Supervisor/Notebook Administrator” and “User/Team member.”

A Supervisor/Notebook Administrator will be able to read and write to all records within a certain notebook. But holding the notebook administrator role will not give them the ability to see other notebooks on a server. In this fashion, large enterprises can enforce notebook isolation between various teams, while retaining a large-scale auditing capability across the enterprise.

A User/Team member will have specific-notebook-only access. In their notebook, they will only be able to see and write to records they, themselves, have created. In this fashion, volunteers, subcontractors, or undergrads can have reduced distractions while ensuring that each user can view only their own records. While this user mode isn’t for everyone, this record-level visibility control is essential for some larger projects and is part of our road to citizen science![5]
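The three roles above can be sketched as a small visibility check. This is a minimal illustration of the rules as described in this post, not the FAIMS3 implementation: the names `Role`, `Account`, `Record`, `can_see_notebook`, and `can_access_record` are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    ADMINISTRATOR = "administrator"  # server-wide
    SUPERVISOR = "supervisor"        # per notebook
    USER = "user"                    # per notebook, own records only


@dataclass(frozen=True)
class Record:
    notebook: str
    created_by: str


@dataclass(frozen=True)
class Account:
    name: str
    server_admin: bool = False
    # Pairs of (notebook name, role held in that notebook); hypothetical layout.
    notebook_roles: tuple = ()

    def role_in(self, notebook):
        """Return the role this account holds in a notebook, or None."""
        if self.server_admin:
            return Role.ADMINISTRATOR
        return dict(self.notebook_roles).get(notebook)


def can_see_notebook(account, notebook):
    """A notebook is visible only to accounts holding some role in it."""
    return account.role_in(notebook) is not None


def can_access_record(account, record):
    """Read/write access to a single record under the three-role model."""
    role = account.role_in(record.notebook)
    if role in (Role.ADMINISTRATOR, Role.SUPERVISOR):
        return True
    if role is Role.USER:
        return record.created_by == account.name
    return False


admin = Account("ana", server_admin=True)
supervisor = Account("sam", notebook_roles=(("survey", Role.SUPERVISOR),))
volunteer = Account("vic", notebook_roles=(("survey", Role.USER),))
own_record = Record(notebook="survey", created_by="vic")
other_record = Record(notebook="survey", created_by="sam")
```

In this sketch the supervisor can open both records in "survey" but cannot see a notebook they hold no role in, while the volunteer can open only `own_record`.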

Reflections on a Common Workflow Language

Crusoe et al. recently published “Methods Included”, an article on the Common Workflow Language (CWL), in Communications of the ACM. In their words, the CWL is:

a pragmatic set of standards for describing and sharing computational workflows. …

In many domains, workflows include diverse analysis components, written in multiple, different computer languages by both end users and third parties. Such polylingual and multi-party workflows are already common or dominant in data-intensive fields, such as bioinformatics, image analysis, and radio astronomy. We envision they could bring important benefits to many other domains.

To thread data through analysis tools, domain experts such as bioinformaticians use specialized command-line interfaces, while experts in other domains use proprietary, customized frameworks. Workflow engines also help with efficiently managing the resources used to run scientific workloads.

The workflow approach helps compose an entire application of these command-line analysis tools: Developers build graphical or textual descriptions of how to run these command-line tools, and scientists and engineers connect their inputs and outputs so that the data flows through.

That is, once the researchers have the data (a problem which FAIMS3 ably solves), they need to clean, process, and refine it. To be clear, the CWL is designed for “Big Data”[6] produced by large, laboratory-based machines, rather than the “Small Data”[7] regimes which so characterise archaeology, ecology, and other fieldwork-based disciplines. However, one thing that Shawn and I are pushing for, in our drive to bring preregistration to archaeology, is that data cleaning and analysis can begin before the spade touches the ground.

While the current goal is to encourage the use of scripts and small programs instead of manual cleaning and analysis in Excel, our vision has always been towards the ability to contribute large amounts of data which could be integrated into a multi-stage analysis using tools like the CWL. Our push towards open linked data, community standard vocabularies, and shared data collection workflows is fully aimed at larger-scale analysis with tools like the CWL.

Even absent a sophisticated multi-step, multi-computer setup like the CWL envisions, the best time to develop an analysis workflow (on whiteboard, paper napkin, or what have you) is right after pilot data is collected. Rather than waiting for full-scale data to come in from fieldwork, developing an analysis suite that can act on your planned data formats and produce useful outputs can provide timely and essential feedback during fieldwork as well as during analysis. The ability to “re-run the analysis” by typing a single command allows for earlier detection of errors or interesting elements worth attention.
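As a rough idea of what “re-run the analysis” with a single command might look like for small data, here is a minimal Python sketch. It assumes a CSV export with hypothetical `record_id` and `feature_type` columns. The pilot data is inlined so the script runs on its own, where a real script would read the exported file instead.

```python
import csv
import io
from collections import Counter


def clean(rows):
    """Normalise whitespace and case; drop rows with no feature type."""
    cleaned = []
    for row in rows:
        feature = (row.get("feature_type") or "").strip().lower()
        if feature:
            cleaned.append({**row, "feature_type": feature})
    return cleaned


def summarise(rows):
    """Count cleaned records per feature type."""
    return Counter(row["feature_type"] for row in rows)


def run_analysis(csv_file):
    """Run the whole pipeline on an open CSV file and return a summary."""
    rows = list(csv.DictReader(csv_file))
    kept = clean(rows)
    return {
        "total": len(rows),
        "kept": len(kept),
        "by_type": dict(summarise(kept)),
    }


# Hypothetical pilot data standing in for a real export.
PILOT = """record_id,feature_type
1,Mound
2, mound 
3,
4,Scatter
"""

if __name__ == "__main__":
    print(run_analysis(io.StringIO(PILOT)))
```

Because every step lives in one script, a dropped row or an unexpected category shows up the next time the command is run, rather than months later at the analysis stage.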

The CWL authors complain:

While (data) standards are commonly adopted and have become expected for funded projects in knowledge representation fields, the same cannot yet be said about workflows and workflow engines.

Nor could it be said about data collection fields. And, to be fair, we are aware of no standards that our XML (FAIMS 2) or JSON (FAIMS3) should be compatible with. But the idea of interoperability of analysis across workflow platforms should be extensible to interoperability of data collection! One day, it would be amazing if we could use something like the CWL to define a set of inputs which would then create a FAIMS (X) notebook with all necessary hooks to feed the data back into the analysis system. The idea that an analysis package/workflow could generate a way of collecting its necessary inputs is future-facing indeed! Shout out if you know any projects in need of this solution!
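To make that idea a little more concrete, here is a speculative sketch of turning a workflow's declared inputs into data-collection fields. The `inputs` dictionary imitates the shorthand map form of a CWL `inputs` section after parsing. The widget names and the output structure are hypothetical and are not the FAIMS3 notebook format. Only simple string type names (with the CWL `?` suffix for optional inputs) are handled.

```python
# Hypothetical mapping from CWL primitive type names to generic form widgets.
WIDGETS = {
    "string": "text",
    "int": "number",
    "long": "number",
    "float": "number",
    "double": "number",
    "boolean": "checkbox",
    "File": "attachment",
}


def fields_from_inputs(inputs):
    """Build one form-field description per declared workflow input."""
    fields = []
    for name, spec in inputs.items():
        # An input may be written as a bare type or as {"type": ...}.
        cwl_type = spec["type"] if isinstance(spec, dict) else spec
        required = not cwl_type.endswith("?")  # trailing "?" marks optional
        base = cwl_type.rstrip("?")
        fields.append({
            "name": name,
            "widget": WIDGETS.get(base, "text"),  # fall back to free text
            "required": required,
        })
    return fields


# Illustrative inputs, as they might appear in a workflow description.
inputs = {
    "site_name": "string",
    "depth_cm": {"type": "float"},
    "photo": "File?",
}
fields = fields_from_inputs(inputs)
```

Each generated field keeps the input's name, so collected values could in principle be handed back to the workflow under the same identifiers.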

What I’m reading

Greg Wilson on effective abstracts in software papers

Scott Alexander on DALL-E-2 and stained glass windows

The woeful security situation of NSW Digital Drivers Licenses

Jeff Epler’s history of the Story of Mel (one of the fundamental tales of hackerdom)

[1] Not counting a command-line external data editor that would be virtually impossible to demo on video in any meaningful way.

[2] Which… seems a very long and very short time ago. I do wish that my mental conception of time would make up its mind.

[3] Speaking as the sysadmin, this is a terrifying trend. Speaking as product owner, this is awesome.

[4] Would you like a surprisingly large mobile data bill when connecting to a server? No? Excellent.

[5] Please contact us if you have a spare six figures and a pressing need to collect citizen science data at scale!

[6] Big Data, n.: Data which does not fit on my laptop for analysis.

[7] Christine Borgman (2015), Big Data, Little Data, No Data: Scholarship in the Networked World.